I propose to consider the question, 'Can machines think?' This should begin with definitions of the meaning of the terms 'machine' and 'think'. The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words 'machine' and 'think' are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, 'Can machines think?' is to be sought in a statistical survey such as a Gallup poll. But this is absurd.

- A. M. Turing (1950, p. 433), 'Computing Machinery and Intelligence'

Acting Humanly

According to the Behaviorist Conception of Intelligence:


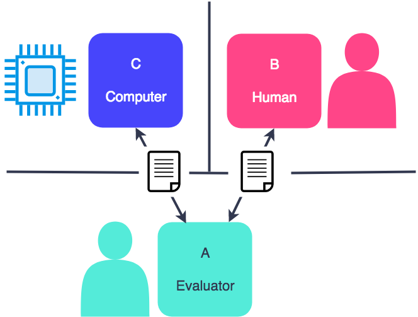

According to the Turing Test or TT:

- A machine is in the 1st room.
- A person is in the 2nd room.
- A human judge is in the 3rd room.

Both the machine and the person respond by teletype to remarks made by the human judge in the 3rd room for some fixed period of time (e.g. an hour). The machine passes the TT just in case the judge cannot tell which are the machine's answers and which are those of the person.
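The setup above can be sketched as a small simulation. This is only an illustrative sketch under stated assumptions: the fixed period of questioning is modelled as a fixed list of remarks, the judge sees two anonymised transcripts labelled X and Y, and "cannot tell" is read as "identifies the machine at roughly chance level over many trials". All names (`run_trial`, `identification_rate`, `keyword_judge`, and the toy respondents) are hypothetical and do not come from Turing's paper.

```python
import random
from typing import Callable, Dict, List

Respondent = Callable[[str], str]              # maps a judge's remark to a reply
Judge = Callable[[Dict[str, List[str]]], str]  # returns the label it takes to be the machine


def run_trial(machine: Respondent, person: Respondent, judge: Judge,
              remarks: List[str], rng: random.Random) -> bool:
    """Run one session; return True if the judge correctly picks out the machine."""
    labels = ["X", "Y"]
    rng.shuffle(labels)                        # hide who sits in which room
    machine_label, person_label = labels
    transcripts = {
        machine_label: [machine(r) for r in remarks],
        person_label: [person(r) for r in remarks],
    }
    # Always present X before Y so that ordering leaks no information.
    ordered = {label: transcripts[label] for label in ("X", "Y")}
    return judge(ordered) == machine_label


def identification_rate(machine: Respondent, person: Respondent, judge: Judge,
                        remarks: List[str], trials: int = 1000, seed: int = 0) -> float:
    """Fraction of trials in which the judge identifies the machine."""
    rng = random.Random(seed)
    hits = sum(run_trial(machine, person, judge, remarks, rng) for _ in range(trials))
    return hits / trials


# Toy stand-ins (assumptions for illustration only).
def person(remark: str) -> str:
    return "I'd have to think about that."


def clumsy_machine(remark: str) -> str:
    return "BEEP. QUERY NOT UNDERSTOOD."


def fluent_machine(remark: str) -> str:
    return "I'd have to think about that."


def keyword_judge(transcripts: Dict[str, List[str]]) -> str:
    """Look for an obvious tell; with no tell, fall back to a fixed guess."""
    for label, replies in transcripts.items():
        if any("BEEP" in reply for reply in replies):
            return label
    return "X"
```

With these stand-ins, the judge always catches `clumsy_machine`, while against `fluent_machine` its identification rate stays near one half, which is the sense in which the judge "cannot tell" in this sketch.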


According to the Turing Syllogism:

- P1: If an entity passes the TT, then it produces a sensible sequence of verbal responses to a sequence of verbal stimuli.
- P2: If an entity produces a sensible sequence of verbal responses to a sequence of verbal stimuli, then it is intelligent (see the Behaviorist Conception of Intelligence).
- C: ∴ If an entity passes the TT, then it is intelligent.

Formally:
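One way to write the syllogism in first-order notation is sketched below. The predicate letters T, S, and I are labels chosen here for illustration and are not taken from the source; the inference from P1 and P2 to C is an instance of hypothetical syllogism. The `\therefore` symbol assumes the `amssymb` package.

```latex
% T(x): x passes the TT
% S(x): x produces a sensible sequence of verbal responses
%       to a sequence of verbal stimuli
% I(x): x is intelligent
\begin{align*}
\text{P1:}\quad & \forall x\,\bigl(T(x) \rightarrow S(x)\bigr) \\
\text{P2:}\quad & \forall x\,\bigl(S(x) \rightarrow I(x)\bigr) \\
\text{C:}\quad  & \therefore\ \forall x\,\bigl(T(x) \rightarrow I(x)\bigr)
\end{align*}
```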

Most simplifications of the TT involve attempting to have a computer in the other room fool an interrogator into believing the computer is a human.

Hugh Loebner's Grand Prize of $100,000 and a Gold Medal have been reserved for the 1st computer whose responses are indistinguishable from a human's.

EXAMPLE 1 of a conversational program designed in accordance with the acting humanly approach: ELIZA

- ELIZA was coded at MIT by Joseph Weizenbaum in the 1960s.
- ELIZA was a very simple program: later ports ran to roughly 200 lines of BASIC (Weizenbaum's original was written in MAD-SLIP).
- ELIZA could imitate a psychotherapist by employing a small set of strategies.
- In the guise of a psychotherapist, ELIZA could adopt the pose of knowing almost nothing of the real world.

Adapted from the JavaScript code for ELIZA by George Dunlop: http://psych.fullerton.edu/mbirnbaum/psych101/eliza.htm
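To make the "small set of strategies" concrete, here is a minimal Python sketch of the kind of keyword matching and pronoun reflection an ELIZA-style program relies on. It is not Weizenbaum's script and not Dunlop's JavaScript; the rules, the reflection table, and the names `REFLECTIONS`, `RULES`, `reflect`, and `eliza_reply` are all assumptions made up for illustration.

```python
import re

# Swap first- and second-person words so a fragment can be echoed back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# (pattern, response template) pairs, tried in order. A real script has many more.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]

# Content-free fallback: lets the program avoid showing any world knowledge.
DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Rewrite a fragment from the speaker's point of view to the listener's."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def eliza_reply(remark: str) -> str:
    """Answer with the first matching rule, or with the fallback."""
    text = remark.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY
```

For example, `eliza_reply("I need a break from my job.")` returns `"Why do you need a break from your job?"`, and a remark that matches no rule, such as `"The weather is nice"`, gets `"Please go on."`. The psychotherapist pose is what makes such content-free replies seem natural.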

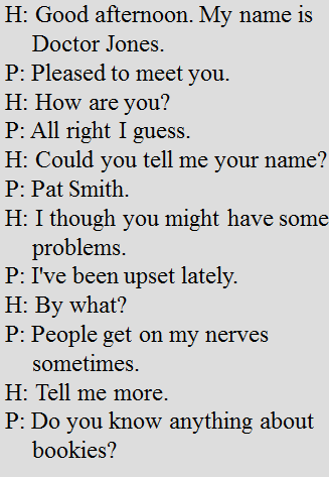

Key: 'H' denotes 'Human'; 'P' denotes 'PARRY'.

EXAMPLE 2 of a conversational program designed in accordance with the acting humanly approach: PARRY

- PARRY was coded at Stanford by Kenneth Colby in the early 1970s.
- PARRY could imitate a paranoid schizophrenic.
- Expert psychiatrists were unable to distinguish PARRY's ramblings from those of human paranoid schizophrenics.
- Colby described PARRY as 'ELIZA with attitude'.

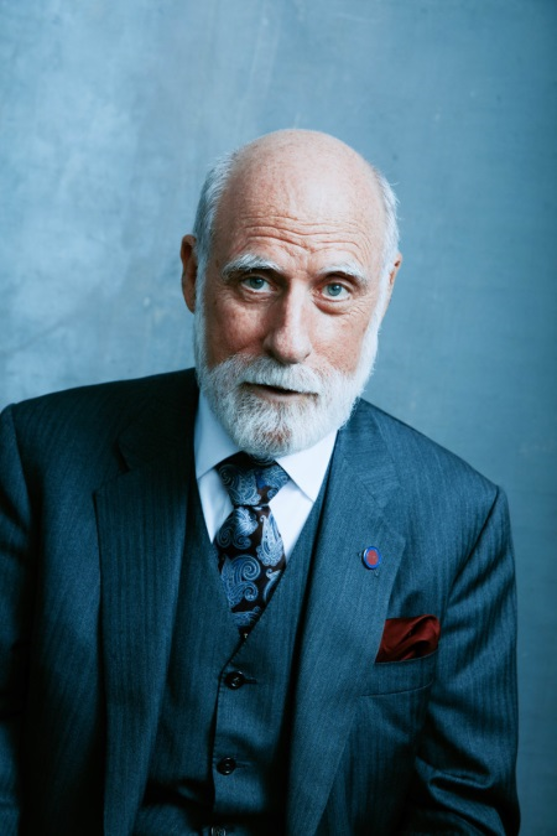

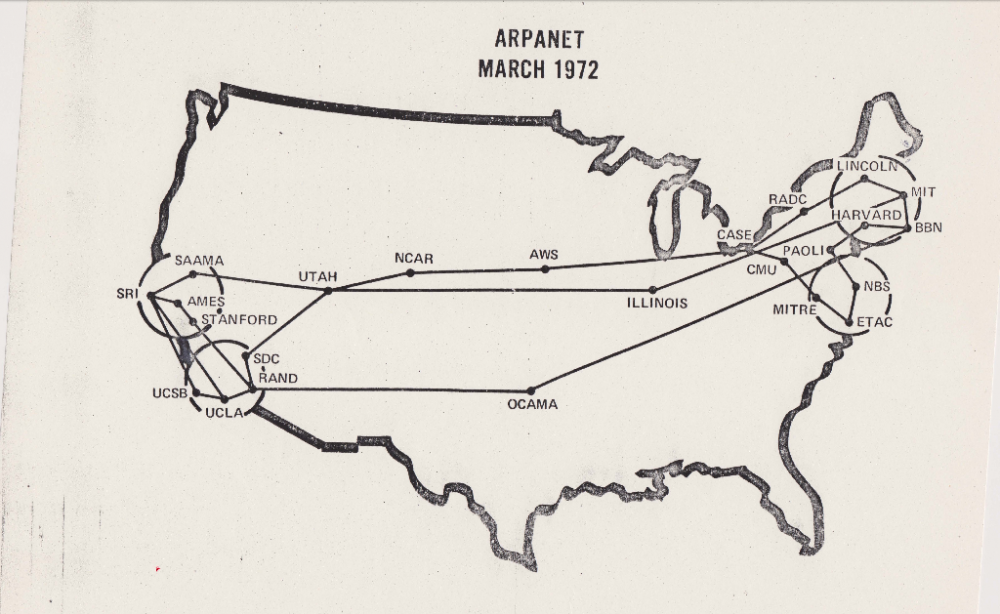

In 1972, Vint Cerf set up a conversation between ELIZA (based at MIT) and PARRY (based at Stanford). Cerf used ARPANET, an early packet-switching network that later became the technical foundation of the Internet.

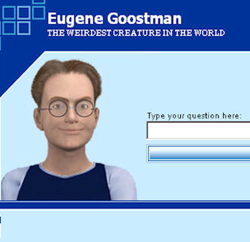

EXAMPLE 3 of a conversational program designed in accordance with the acting humanly approach: Eugene Goostman

- Eugene Goostman was a chatbot developed by three programmers: Vladimir Veselov, Eugene Demchenko, and Sergey Ulasen.
- In 2014, Eugene Goostman was claimed to have passed the TT by fooling 33% of the judges into thinking it was human.
- 2014 marked the 60th anniversary of Alan Turing's death.
- Eugene Goostman could imitate a 13-year-old Ukrainian boy.
- In the guise of a 13-year-old Ukrainian boy, Eugene Goostman could get away with its grammatical errors and lack of general knowledge.

Have a conversation with Eugene Goostman: http://eugenegoostman.elasticbeanstalk.com/
