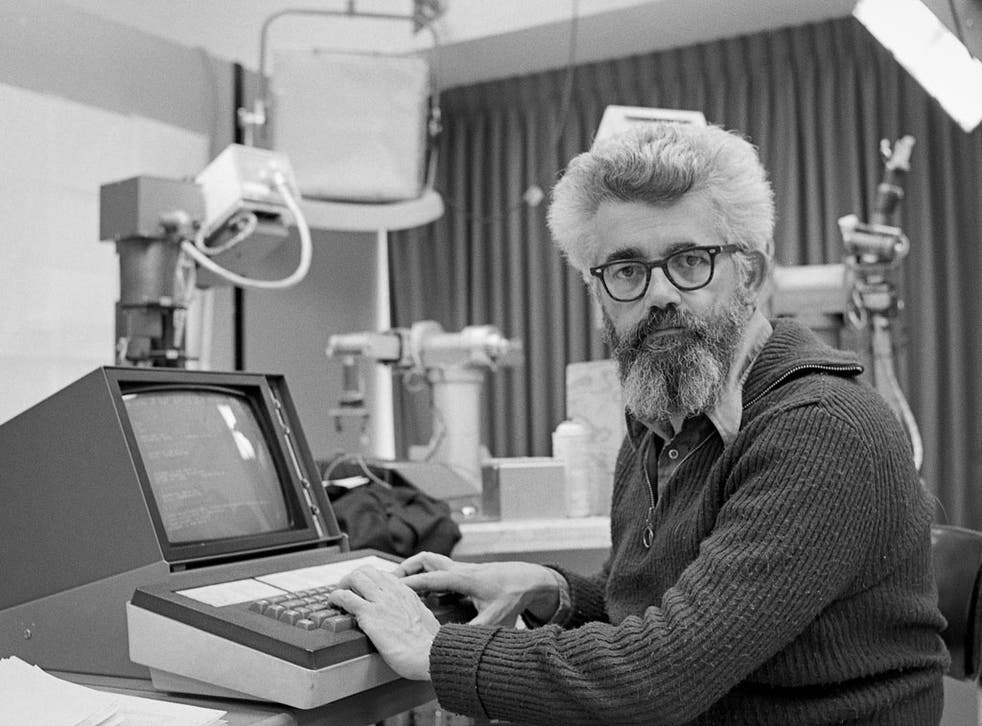

I propose to consider the question, 'Can machines think?'

This should begin with definitions of the meaning of the terms 'machine' and 'think'.

The definitions might be framed so as to reflect so far as possible the normal use of words, but this attitude is dangerous.

If the meaning of the words 'machine' and 'think' are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, 'Can machines think?' is to be sought in a statistical survey such as a Gallup poll. But this is absurd.

- A. M. Turing (1950, p. 433), 'Computing Machinery and Intelligence'

Thinking Rationally


According to the Physical Symbol System Hypothesis or PSSH (Newell & Simon, 1976):

- A physical symbol system has the necessary and sufficient means for general intelligent action
- The two most important classes of physical symbol systems with which we are acquainted are human beings and computers

IMPLICATIONS of the PSSH:

- The symbolic behaviour of human beings arises because we have the characteristics of a physical symbol system
- General intelligent action calls for a physical symbol system
- Appropriately programmed computers would be capable of intelligent action
- Intelligent systems (natural or artificial) are effectively equivalent

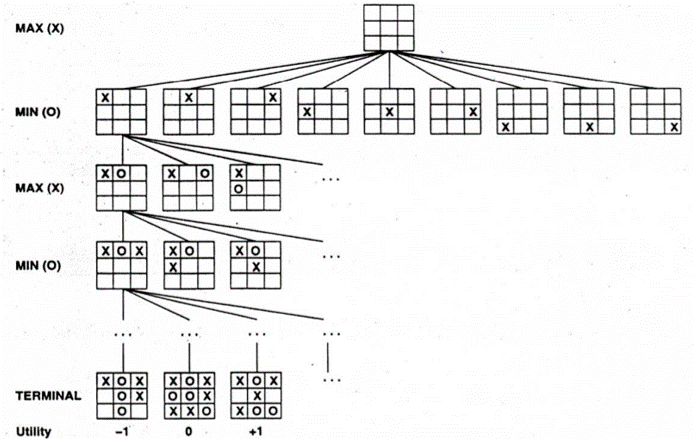

According to the Heuristic Search Hypothesis or HSH (Newell & Simon, 1976):

- Physical symbol systems solve problems using the processes of heuristic search
- Solutions to a problem are represented as symbol structures
- A physical symbol system exercises its intelligence in problem-solving by searching until the symbol structures of solutions are produced
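The idea of searching until a solution's symbol structure is produced can be illustrated with a minimal sketch. This is not Newell and Simon's own program: the toy problem (reach a goal number from a start number), the operator symbols `inc` and `dbl`, and the function names are all assumptions chosen for illustration. The search is a simple greedy best-first search, and the solution it returns is a symbol structure in the literal sense, a list of operator symbols.

```python
import heapq

def heuristic_search(start, goal, successors, heuristic):
    """Greedy best-first search: always expand the state that the
    heuristic ranks as closest to the goal."""
    # Each frontier entry: (heuristic estimate, state, path of operator symbols)
    frontier = [(heuristic(start), start, [])]
    seen = {start}
    while frontier:
        _, state, path = heapq.heappop(frontier)
        if state == goal:
            return path  # the solution, expressed as a symbol structure
        for op, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (heuristic(nxt), nxt, path + [op]))
    return None  # search space exhausted without reaching the goal

def successors(n):
    # Hypothetical toy operators; the bound keeps the search space finite.
    return [("inc", n + 1), ("dbl", n * 2)] if n <= 100 else []

GOAL = 10
path = heuristic_search(1, GOAL, successors, lambda n: abs(GOAL - n))
print(path)
```

Replaying the returned operator symbols from the start state reaches the goal. The heuristic only guides which states are expanded first, so the path found is not guaranteed to be the shortest one.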


According to the Logicist Manifesto (Bringsjord, 2008):

- A person is the bearer of propositional attitudes:
  - The basic units are propositions or declarative statements (denoted by propositional variables p, q, etc.) that convey propositional content
  - Propositions can carry such values as TRUE, FALSE, PROBABLE, UNKNOWN, etc.
  - The basic processes over units of inference are the modes of reasoning (viz. deductive, inductive, abductive, analogical, etc.)
- Logic-based AI has three attributes:


EXAMPLE 1: Logic Theorist or LT (Newell, Shaw, & Simon, 1957)

- LT is a logical theorem-proving program capable of providing proofs in propositional logic
- LT managed to prove 38 of the first 52 theorems in Chapter 2 of Whitehead & Russell's (1910, 1912, 1913) Principia Mathematica
- LT was able to provide a more elegant proof for a logical theorem (THEOREM 2.85) than the one found in Whitehead & Russell (1910, 1912, 1913)
- THEOREM 2.85: ((p ∨ q) → (p ∨ r)) → (p ∨ (q → r))
- Simon claimed to have solved the mind-body problem with LT
- However, the editors of the Journal of Symbolic Logic rejected a paper co-authored by Newell, Simon, and LT
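As a sanity check on THEOREM 2.85, the formula can be verified to be a tautology by enumerating all truth assignments. Note that this is a different technique from the one LT used: LT searched for proofs from the axioms of Principia Mathematica, whereas the sketch below only confirms semantic validity by brute force. The helper names `implies`, `theorem_2_85`, and `is_tautology` are assumptions introduced for this illustration.

```python
from itertools import product

def implies(a, b):
    # Material implication: a → b is false only when a is true and b is false.
    return (not a) or b

def theorem_2_85(p, q, r):
    # ((p ∨ q) → (p ∨ r)) → (p ∨ (q → r))
    return implies(implies(p or q, p or r), p or implies(q, r))

def is_tautology(formula, n_vars):
    # A formula is a tautology if it holds under every truth assignment.
    return all(formula(*values) for values in product([False, True], repeat=n_vars))

print(is_tautology(theorem_2_85, 3))
```

A truth table establishes that the theorem holds, but unlike LT's output it does not yield a derivation that a reader could compare with the proof in Principia Mathematica.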


EXAMPLE 2: Advice Taker or AT (McCarthy, 1959)

- AT is a program designed by John McCarthy and Marvin Minsky for solving problems by manipulating sentences in a formal language
- AT will draw immediate conclusions from a premise set
- The conclusions will be either declarative or imperative sentences
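Drawing immediate conclusions from a premise set can be sketched as a single pass of rule application. The following is a loose illustration only, not McCarthy's formalism: sentences are plain strings rather than formulas in a formal language, and the premises, rules, and the function name `immediate_conclusions` are hypothetical. Each conclusion is tagged as declarative (a new fact) or imperative (an action to carry out).

```python
def immediate_conclusions(premises, rules):
    """Apply every rule once and return the conclusions whose
    conditions are all present in the premise set."""
    return [
        conclusion
        for conditions, conclusion in rules
        if all(c in premises for c in conditions)
    ]

# Hypothetical premise set and rules, written as plain strings.
premises = {"at(I, desk)", "at(desk, home)"}
rules = [
    (["at(I, desk)", "at(desk, home)"], ("declarative", "at(I, home)")),
    (["at(I, desk)"], ("imperative", "go(desk, car)")),
    (["at(I, airport)"], ("declarative", "done")),
]

conclusions = immediate_conclusions(premises, rules)
for kind, sentence in conclusions:
    print(kind, sentence)
```

Only the first two rules fire, because the premise set does not contain `at(I, airport)`. Conclusions are drawn in one step only; feeding declarative conclusions back into the premise set and repeating would turn this into forward chaining.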
